I explore Model Driven Architecture (MDA). This approach uses modeling for programming. Models drive the generation of code according to patterns.

We tend to write a lot of similar code over and over. If you have ever written code to persist a Java object into a relational database table, you know what I mean. It is similar for every object. Although the characters you type are different, there is a definite pattern, and you know deep down that an intelligent person should not have to endure such tedium day in and day out. The same goes for a lot of user interface code, like data entry forms. The same code gets written again and again; only the names are different.
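To make the repetition concrete, here is a minimal sketch of the persistence case. It assumes a hypothetical `EntityModel` record (a table name plus column names) and a hypothetical `InsertGenerator` class; neither comes from any real MDA tool. It also assumes Java 16 or later, for records. The point is only that the part that varies between objects fits in one line, while the boilerplate can be derived from it.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical compact model of a persistent object: just the names that vary.
record EntityModel(String table, List<String> columns) {}

final class InsertGenerator {
    // Expands the model into the repetitive SQL that would otherwise be typed by hand.
    static String insertSql(EntityModel model) {
        String columnList = String.join(", ", model.columns());
        // One JDBC-style placeholder per column.
        String placeholders = model.columns().stream()
                .map(column -> "?")
                .collect(Collectors.joining(", "));
        return "INSERT INTO " + model.table()
                + " (" + columnList + ") VALUES (" + placeholders + ")";
    }

    public static void main(String[] args) {
        EntityModel customer = new EntityModel("customer", List.of("id", "name", "email"));
        // prints: INSERT INTO customer (id, name, email) VALUES (?, ?, ?)
        System.out.println(insertSql(customer));
    }
}
```

Adding another persistent object then means writing one more `EntityModel` line, not another hand-typed statement.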

I believe very strongly that model driven architecture is the way of the future for software development. Objects led to interfaces. Then, collaborations. Then, patterns and pattern languages (families of patterns). Model driven architecture is a natural extension of this trend. After identifying a pattern language, it should be possible to instantiate those patterns (write a software program) by expressing that intent through modeling. Modeling in the abstract sense is the act of capturing concepts in a notation.

I consider programming in Java to be a form of modeling. A programming language is not a very compact modeling language. Programming languages are designed to have a lot of redundancy to allow for error checking. Patterns in the code also indicate a lot of information that could be expressed in a more compact form. I view MDA as expressing software concepts in a very compact form (the model).

I think we need an example. I could write a program to represent pictures formed from geometric shapes. The software itself would probably require thousands of lines of code to perform the editing, rendering, and other functions. However, the application is really only dealing with a few basic concepts: ellipses, polygons, line segments, and a few others.

It should be possible to model each shape with a minimum number of parameters; e.g., an ellipse would be modeled by three points. In this manner, we could express any diagram understood by this application very compactly in a modeling language that captures the parameterization of shapes.

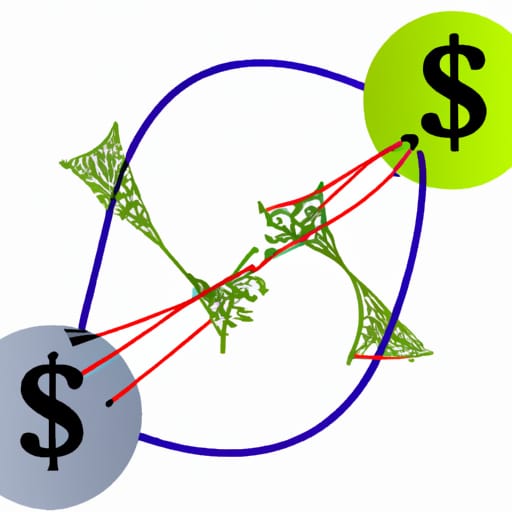
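As a rough sketch of what "an ellipse modeled by three points" could look like, the code below assumes the three points are the two foci plus one point on the curve. That is only one possible parameterization, and the names `Point`, `Ellipse`, and `EllipseDemo` are made up for illustration. Everything else about the ellipse is derived from those three points.

```java
// A point in the plane.
record Point(double x, double y) {
    double distanceTo(Point other) {
        return Math.hypot(x - other.x, y - other.y);
    }
}

// Assumed parameterization: two foci and one point lying on the ellipse.
record Ellipse(Point focus1, Point focus2, Point onCurve) {
    // The distances from any point on an ellipse to the two foci sum to 2a.
    double semiMajorAxis() {
        return (onCurve.distanceTo(focus1) + onCurve.distanceTo(focus2)) / 2.0;
    }

    // b = sqrt(a^2 - c^2), where c is half the distance between the foci.
    double semiMinorAxis() {
        double a = semiMajorAxis();
        double c = focus1.distanceTo(focus2) / 2.0;
        return Math.sqrt(a * a - c * c);
    }

    // The center is the midpoint of the two foci.
    Point center() {
        return new Point((focus1.x() + focus2.x()) / 2.0, (focus1.y() + focus2.y()) / 2.0);
    }
}

final class EllipseDemo {
    public static void main(String[] args) {
        Ellipse e = new Ellipse(new Point(-3, 0), new Point(3, 0), new Point(0, 4));
        System.out.println("a = " + e.semiMajorAxis() + ", b = " + e.semiMinorAxis());
    }
}
```

The three points are the whole model; the axes and the center are computed on demand, not stored.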

Now, let’s take that graphics package and reuse it as the foundation for constructing an application that renders telecommunications networks. This application deals with concepts like location, network elements, ports, and connections. Each concept is rendered with symbols, which are merely recurring patterns of our familiar geometric shapes.

The parameterization of shapes is no longer the most appropriate modeling language for capturing the essential concepts of this application. A more compact representation can be achieved by parameterizing the concepts that are directly understood by the application. This is the essence of model driven architecture.
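The shift in vocabulary can be sketched in a few lines. The example assumes a hypothetical `NetworkElement` concept with a name, a position, and a port count, plus a hypothetical `NetworkRenderer` that expands it into `Shape` primitives: a rectangular polygon for the body and a small ellipse for each port. The symbol layout and sizes are arbitrary choices for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Low-level vocabulary: a shape primitive described by its bounding box.
record Shape(String kind, double x, double y, double width, double height) {}

// High-level vocabulary: the concept the application actually deals with.
record NetworkElement(String name, double x, double y, int portCount) {}

final class NetworkRenderer {
    // Arbitrary symbol dimensions, chosen only for this sketch.
    static final double BODY_WIDTH = 60.0;
    static final double BODY_HEIGHT = 40.0;
    static final double PORT_SIZE = 6.0;

    // Expands one domain concept into the recurring pattern of shapes that draws it.
    static List<Shape> toShapes(NetworkElement element) {
        List<Shape> shapes = new ArrayList<>();
        shapes.add(new Shape("polygon", element.x(), element.y(), BODY_WIDTH, BODY_HEIGHT));
        // Ports are spaced evenly along the bottom edge of the body.
        double spacing = BODY_WIDTH / (element.portCount() + 1);
        for (int i = 1; i <= element.portCount(); i++) {
            double portX = element.x() + i * spacing - PORT_SIZE / 2.0;
            shapes.add(new Shape("ellipse", portX, element.y() + BODY_HEIGHT, PORT_SIZE, PORT_SIZE));
        }
        return shapes;
    }

    public static void main(String[] args) {
        NetworkElement router = new NetworkElement("router-1", 100.0, 50.0, 4);
        toShapes(router).forEach(System.out::println);
    }
}
```

One `NetworkElement` with four parameters stands in for five shapes with five parameters each, and the gap widens as the symbols get richer.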

Not everyone views MDA as I do. Actually, I have yet to encounter anyone who has expressed it as simply as that. Most proponents of MDA ramble on about UML and MOF and platform independence and all kinds of other non-essential mumbo jumbo. The key idea that is lost among the OMG UML/MOF folks is the notion of unit economy in the theory of concepts; that is, the need to represent concepts in the most compact form. This is necessary within the mind, and this need is reflected in language.

UML makes perfect sense as a language for representing analysis and design in software development. However, when one is looking to model concepts within any other context, the absurd verbosity and complexity exhibited by UML/MOF make as much sense as using geometric shapes as the modeling language for telecommunications networks.