Modern physics is fundamentally broken. Quantum theory explains how the universe works at small scales, such as atoms. Quantum theory is remarkably accurate, agreeing extremely well with experiments. The theory of general relativity explains how the universe works at large scales, such as stars and galaxies. General relativity is also remarkably accurate, agreeing extremely well with experiments. However, the two theories disagree with each other: both are mostly correct, but each is also wrong in some ways. After decades of trying to reconcile these two theories of the universe, physicists are still not close to formulating a theory of everything. There are several promising avenues of research, such as string theory and loop quantum gravity, but none of them has succeeded so far.

It makes me wonder where the error in thinking lies that could have led physicists throughout the world down roads that are probably dead ends.

It’s been pretty obvious to many where the flaw in quantum theory lies. It treats the time dimension as independent of the three space dimensions, which completely contradicts general relativity, where space and time form a single manifold. Quantum theory also treats space and time as an absolute background, as if they existed independently of the particles and fields that make up reality. However, only particles and fields are real. Space and time as a background do not actually exist; they are mathematical constructs reflecting the geometry of some physical theory.

However, where is general relativity flawed? This is not as obvious. The sharpest conflict with quantum theory has always been gravity, which general relativity describes as the curvature of space-time. Quantum theory has never had a good explanation of gravity. The source of gravity is mass-energy.

Something else we should be aware of is the Standard Model of particle physics. It too represents our current best understanding of the universe, and it too is flawed. It does not include gravity, among other phenomena. Interestingly enough, it predicts a particle called the Higgs boson, which is responsible for giving elementary particles their mass. However, the Higgs boson has never been experimentally observed. The Large Hadron Collider (LHC) is expected to confirm or refute the existence of the Higgs boson once it becomes operational.

Maybe it is more than a coincidence that the Standard Model, which does not include gravity, also predicts the origin of mass, and that this prediction has so far eluded observation. Given that gravity is where quantum theory and general relativity conflict, perhaps we should take a closer look at mass-energy.

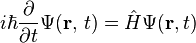

Let’s take a look at the Schrödinger equation from quantum theory:

$$
i\hbar \frac{\partial}{\partial t} \Psi(\mathbf{r}, t) = \hat{H} \Psi(\mathbf{r}, t)
$$

The $H$ is the Hamiltonian, which represents the total energy of the system (kinetic + potential). The kinetic energy depends on mass. Einstein’s mass-energy equivalence tells us that mass is equivalent to a whole lot of energy, its rest energy: $E = mc^2$.
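To get a feel for how much energy $E = mc^2$ packs into ordinary matter, here is a minimal Python sketch that computes the rest energy of one gram of mass. The function name `rest_energy` and the TNT comparison are hypothetical choices made only for illustration; the speed of light is the exact SI value, and 4.184 × 10⁹ J per ton of TNT is the conventional definition.

```python
# Rest energy E = m * c**2 for a small mass (illustrative sketch only).
C = 299_792_458.0      # speed of light in m/s (exact SI value)
TNT_TON_J = 4.184e9    # energy of one ton of TNT in joules (conventional value)

def rest_energy(mass_kg: float) -> float:
    """Return the rest energy, in joules, of a mass given in kilograms."""
    return mass_kg * C ** 2

if __name__ == "__main__":
    e = rest_energy(0.001)  # one gram
    print(f"{e:.3e} J")  # → 8.988e+13 J
    # Express the same energy in kilotons of TNT for comparison.
    print(f"{e / TNT_TON_J / 1000:.1f} kilotons of TNT")
```

One gram works out to roughly 9 × 10¹³ J, about 21 kilotons of TNT, which is what "a whole lot of energy" means in practice.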

Meanwhile, special relativity tells us that mass is relative: the (relativistic) mass of something depends on how fast it is moving relative to the observer. The observer and the relative motion are further things that the Schrödinger equation, being non-relativistic, does not account for.
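The speed dependence can be made concrete with the Lorentz factor $\gamma = 1/\sqrt{1 - v^2/c^2}$, which gives the relativistic mass $m = \gamma m_0$ of an object with rest mass $m_0$. Below is a minimal Python sketch under that convention; the function names are hypothetical. Note that many physicists today prefer to reserve "mass" for the invariant rest mass $m_0$ and describe the increase in terms of energy and momentum instead.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact SI value)

def lorentz_factor(v: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed v in m/s with |v| < c."""
    if not abs(v) < C:
        raise ValueError("speed must be less than the speed of light")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)

def relativistic_mass(rest_mass: float, v: float) -> float:
    """Mass an observer assigns to an object moving at speed v (old 'relativistic mass' convention)."""
    return lorentz_factor(v) * rest_mass

# Print the mass of a 1 kg rest mass, in units of m0, at several fractions of c.
for fraction in (0.0, 0.5, 0.9, 0.99):
    m = relativistic_mass(1.0, fraction * C)
    print(f"v = {fraction:.2f}c  ->  m = {m:.3f} m0")
```

At half the speed of light the factor is only about 1.155, but it grows without bound as $v$ approaches $c$, which is why the function rejects speeds at or above the speed of light.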

We keep encountering mass in these equations. Maybe we don’t understand mass as well as we think we do, and that would lead us to misunderstand gravity and, consequently, the geometry of space-time. I wonder if all of this points to mass as the culprit, the place where we have been getting it all wrong. Mass is where I suspect the problem lies. If the LHC does not find the Higgs boson, I think this will tell us just how wrong we are about mass.